Reinforcement learning (RL) is one of the most popular machine learning paradigms. RL is well suited to teaching machines how to operate in constantly changing environments, such as games, VR, or even the real world.

In this article, we will explore the learning processes, key algorithms, and practical applications that make RL a transformative force in the field of ML.

Defining reinforcement learning

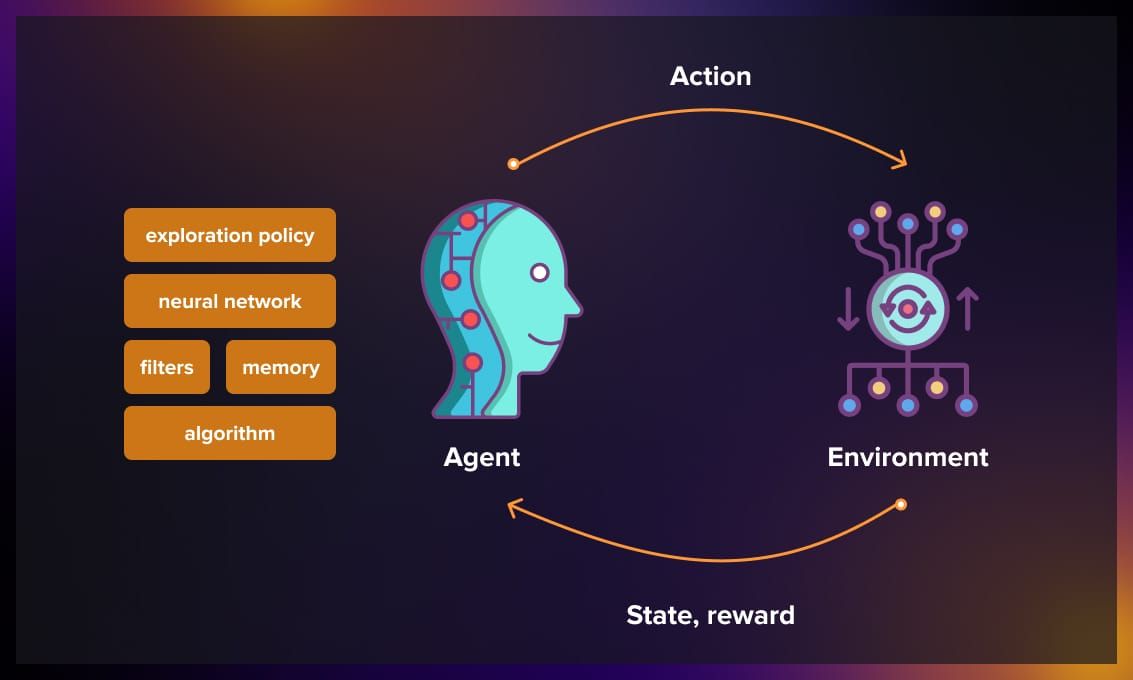

Reinforcement learning is a subset of machine learning where an algorithm, referred to as an agent, learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on the actions it takes, allowing it to iteratively improve its decision-making capabilities over time.

Key components of reinforcement learning

Unlike other ML methods, where models are trained on labeled or unlabeled datasets, RL agents learn through trial and error, guided by rewards and penalties. This setup relies on several components:

1. Agent

The focal point of reinforcement learning, the agent, is the entity making decisions and taking actions within the defined environment. It can be a software agent controlling a robot, a game-playing algorithm, or any system designed to learn and adapt.

2. Environment

The external system or context within which the agent operates is known as the environment. It is the dynamic space where the agent interacts, makes decisions, and receives feedback. Environments can range from virtual simulations to real-world scenarios.

3. Actions

Actions represent the decisions or moves that the agent can take within the environment. These actions are the levers through which the agent influences its surroundings. The set of possible actions is defined by the problem domain and the specific application.

4. Rewards

Rewards serve as the feedback mechanism for the agent. After taking an action, the agent receives a reward or a penalty based on the outcome. The goal of the agent is to learn to take actions that maximize cumulative rewards over time.

5. Policies

A policy is the strategy or set of rules that the agent follows to determine its actions in a given state. It maps the observed states of the environment to the actions that the agent should take. Developing an optimal policy is the primary objective of reinforcement learning.
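A minimal sketch of the last two components. It assumes a discount factor (often written γ) that the description above does not specify: many RL formulations define the quantity to maximize as the discounted return G = r₁ + γ·r₂ + γ²·r₃ + …, so near-term rewards count more than distant ones. The state and action names and the value γ = 0.9 are hypothetical, chosen only for illustration.

```python
# Minimal sketch: a deterministic policy stored as a lookup table, plus the
# discounted return an agent tries to maximize.
# State names, actions, and GAMMA are hypothetical, chosen for illustration.

GAMMA = 0.9  # discount factor (assumed); must satisfy 0 <= GAMMA <= 1

# A policy maps each observed state to the action the agent should take.
policy = {
    "low_battery": "recharge",
    "obstacle_ahead": "turn_left",
    "clear_path": "move_forward",
}


def discounted_return(rewards, gamma=GAMMA):
    """Return r_1 + gamma*r_2 + gamma^2*r_3 + ... for a list of rewards."""
    total = 0.0
    # Walk backwards so each step is simply G = r + gamma * G_next.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


if __name__ == "__main__":
    print(policy["obstacle_ahead"])
    print(discounted_return([0, 0, 1]))  # approximately 0.81
```

Here the policy is a fixed table; in practice it is usually a learned function, and it may output probabilities over actions rather than a single action.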

The learning process in reinforcement learning

Reinforcement learning stands out for how its agents learn: they must balance exploration against exploitation, and they rely fundamentally on trial and error. These two features distinguish RL from other machine learning paradigms.

1. Exploration vs. exploitation

In the pursuit of optimal strategies, RL agents face a constant dilemma: whether to explore new actions to uncover their effects or to exploit known actions to maximize immediate rewards. Striking the right balance is crucial for the agent’s long-term learning and performance.

The classic formalization of this dilemma is the “multi-armed bandit problem,” named after slot machines (“one-armed bandits”). Each “arm” represents a different action or choice that an agent (like a robot, algorithm, or decision-making system) can take, and each pays out rewards according to an unknown distribution. The agent needs to learn over time which action is the most rewarding.

- Exploration. This involves trying out new actions, even those with uncertain outcomes. It allows the agent to gather information about the environment and discover potentially better strategies. However, excessive exploration may hinder short-term rewards.

- Exploitation. Exploiting involves choosing actions that are known to yield higher rewards based on the agent’s current understanding. While this can lead to immediate gains, over-reliance on exploitation may cause the agent to miss out on discovering better strategies.

Finding the optimal trade-off between exploration and exploitation is a central challenge in RL. Various exploration strategies, such as epsilon-greedy policies or Thompson sampling, are employed to manage this delicate balance.
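To illustrate the epsilon-greedy strategy, the sketch below assumes a three-armed Bernoulli bandit whose payout probabilities (0.2, 0.5, 0.8) are made up for the example. With probability `epsilon` the agent explores by picking a random arm; otherwise it exploits the arm with the highest estimated reward so far.

```python
import random


def run_epsilon_greedy(true_means, epsilon=0.1, steps=5000, seed=0):
    """Epsilon-greedy agent on a Bernoulli multi-armed bandit.

    true_means: hypothetical payout probability of each arm (hidden from the agent).
    Returns the agent's estimated value of each arm and how often each was pulled.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per arm
    counts = [0] * n_arms

    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick any arm at random
        else:
            # exploit: pick the arm with the highest estimate so far
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # Environment: pay 1 with the arm's hidden probability, else 0.
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: new = old + (reward - old) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates, counts


if __name__ == "__main__":
    est, cnt = run_epsilon_greedy([0.2, 0.5, 0.8])
    print("estimates:", [round(e, 2) for e in est])
    print("pulls per arm:", cnt)
```

A larger `epsilon` gathers more information at the cost of more pulls on weaker arms; a common variant decays `epsilon` over time so the agent explores early and exploits later.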

2. Trial and error

At the heart of RL is the concept of learning through trial and error. The agent interacts with its environment, taking actions and observing the subsequent outcomes. The learning process unfolds as the agent refines its strategies based on the feedback received in the form of rewards or penalties.

- Taking actions. The agent selects actions based on its current policy, influencing the state of the environment.

- Observing outcomes. The environment reacts to the agent’s actions, resulting in a new state. The agent then receives feedback in the form of a reward or penalty based on the outcome.

- Adjusting strategies. The agent updates its understanding of the environment and refines its decision-making strategies. This iterative process continues as the agent hones its ability to make better decisions over time.

Trial and error is a fundamental aspect of RL, allowing the agent to adapt to dynamic and complex environments. It leverages the cumulative experience gained from interactions to iteratively improve decision-making strategies.
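The three steps above can be sketched as a loop. The environment is a hypothetical six-cell corridor: the agent starts at the left end, each move costs a little, and reaching the right end pays a reward. For the “adjusting strategies” step, this sketch uses a simple every-visit Monte Carlo update: after each episode, the estimated value of every visited (state, action) pair moves toward the discounted return that actually followed it. The class, method names, and numeric settings are illustrative assumptions, not a specific library’s API.

```python
import random


class Corridor:
    """Hypothetical toy environment: cells 0..length-1, goal at the right end.

    Actions: 0 = step left, 1 = step right. Each move costs -0.01;
    reaching the goal pays +1 and ends the episode.
    """

    def __init__(self, length=6):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        move = 1 if action == 1 else -1
        self.pos = min(max(self.pos + move, 0), self.length - 1)  # walls at both ends
        done = self.pos == self.length - 1
        reward = 1.0 if done else -0.01
        return self.pos, reward, done


def train(episodes=500, epsilon=0.2, alpha=0.05, gamma=0.95, max_steps=200, seed=0):
    rng = random.Random(seed)
    env = Corridor()
    values = {}  # (state, action) -> estimated discounted return

    def choose(state):
        # 1. Take an action: usually the best-looking one, sometimes a random one.
        if rng.random() < epsilon:
            return rng.randrange(2)
        return max((0, 1), key=lambda a: values.get((state, a), 0.0))

    for _ in range(episodes):
        state, trajectory = env.reset(), []
        for _ in range(max_steps):
            action = choose(state)
            # 2. Observe the outcome: new state, reward, and whether the episode ended.
            next_state, reward, done = env.step(action)
            trajectory.append((state, action, reward))
            state = next_state
            if done:
                break
        # 3. Adjust the strategy: move each visited (state, action) estimate
        #    toward the discounted return that followed it.
        g = 0.0
        for s, a, r in reversed(trajectory):
            g = r + gamma * g
            old = values.get((s, a), 0.0)
            values[(s, a)] = old + alpha * (g - old)
    return values


if __name__ == "__main__":
    learned = train()
    greedy = [max((0, 1), key=lambda a: learned.get((s, a), 0.0)) for s in range(5)]
    print("greedy action per cell (1 = right):", greedy)
```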

Challenges of reinforcement learning

Reinforcement learning poses certain challenges for developers:

- Exploration strategies. Designing effective exploration strategies is a nuanced task. Balancing curiosity with the need for optimal performance requires thoughtful consideration and experimentation. There are various exploration strategies, such as epsilon-greedy, softmax exploration, and Upper Confidence Bound (UCB). So far, there is no reliable way to choose among them without prior experience or experimentation.

- Overcoming local optima. In complex environments, the agent may settle into suboptimal solutions, known as local optima. Effective exploration strategies are crucial to prevent getting stuck in these less-than-ideal states. Local optima often show up as a plateau in the agent’s rewards, and there are various ways to escape them, depending on the strategy and the setup. Usually the remedy is more aggressive exploration or some extra randomness for a few steps.

- Handling uncertainty. RL must contend with uncertainty in the environment. Strategies for dealing with unknowns and adapting to changing conditions are pivotal for successful learning.

Addressing these challenges is part of building any RL-based system, and there is no universal recipe.
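Two of the exploration strategies named above, UCB and softmax exploration, can be sketched as action-selection functions for a bandit setting. The UCB1 variant adds an exploration bonus sqrt(2 ln t / n) that shrinks as an arm is tried more often; the softmax (Boltzmann) rule samples each arm with probability proportional to exp(value / temperature), so a higher temperature explores more. The function names and the `c` and `temperature` parameters are illustrative choices, not taken from a specific library.

```python
import math
import random


def ucb1_select(counts, values, t, c=2.0):
    """UCB1: pick the arm maximizing value + sqrt(c * ln t / n).

    counts[a]: times arm a was pulled; values[a]: its average reward;
    t: current time step (at least 1). Untried arms are selected first.
    """
    for a, n in enumerate(counts):
        if n == 0:
            return a
    return max(
        range(len(counts)),
        key=lambda a: values[a] + math.sqrt(c * math.log(t) / counts[a]),
    )


def softmax_select(values, temperature, rng=random):
    """Softmax exploration: sample arm a with probability proportional to exp(values[a] / temperature)."""
    m = max(values)  # subtract the max before exponentiating to avoid overflow
    weights = [math.exp((v - m) / temperature) for v in values]
    return rng.choices(range(len(values)), weights=weights)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    payout = [0.2, 0.5, 0.8]  # hypothetical arm probabilities
    counts, values = [0, 0, 0], [0.0, 0.0, 0.0]
    for t in range(1, 2001):
        arm = ucb1_select(counts, values, t)
        reward = 1.0 if rng.random() < payout[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]
    print("UCB1 pulls per arm:", counts)
    print("softmax pick:", softmax_select(values, temperature=0.1, rng=rng))
```

Both functions expect the caller to track how often each arm was pulled and its average reward so far, as the demo loop does.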

Reinforcement learning algorithms

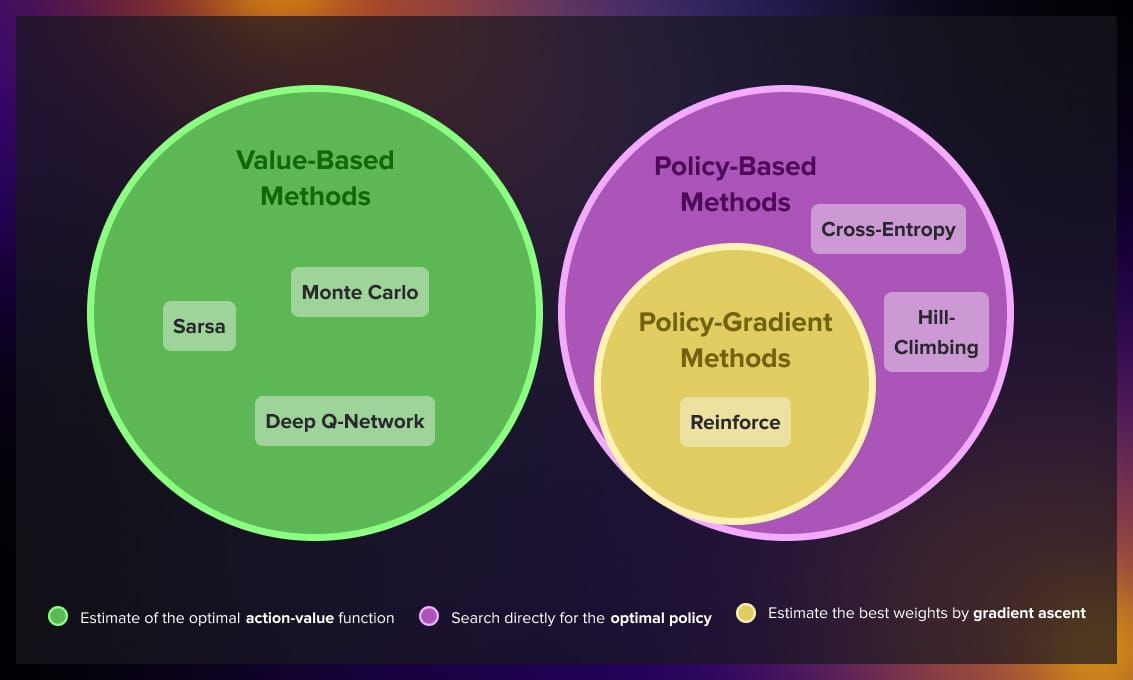

There are several algorithms that are commonly used for reinforcement learning:

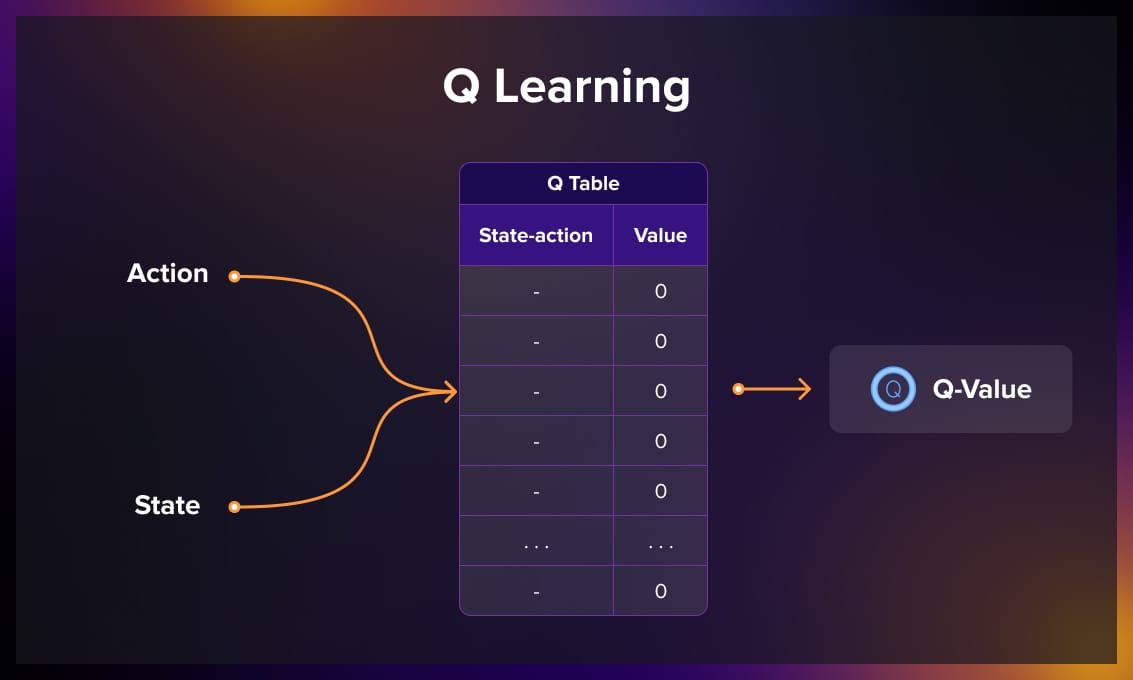

Q-Learning

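Q-Learning is a foundational RL algorithm that estimates the value (the Q-value) of taking a particular action in a given state. It iteratively updates its Q-values from the rewards it observes and, given enough exploration and a suitably decaying learning rate, converges toward the optimal values; the optimal strategy is then to pick the action with the highest Q-value in each state.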

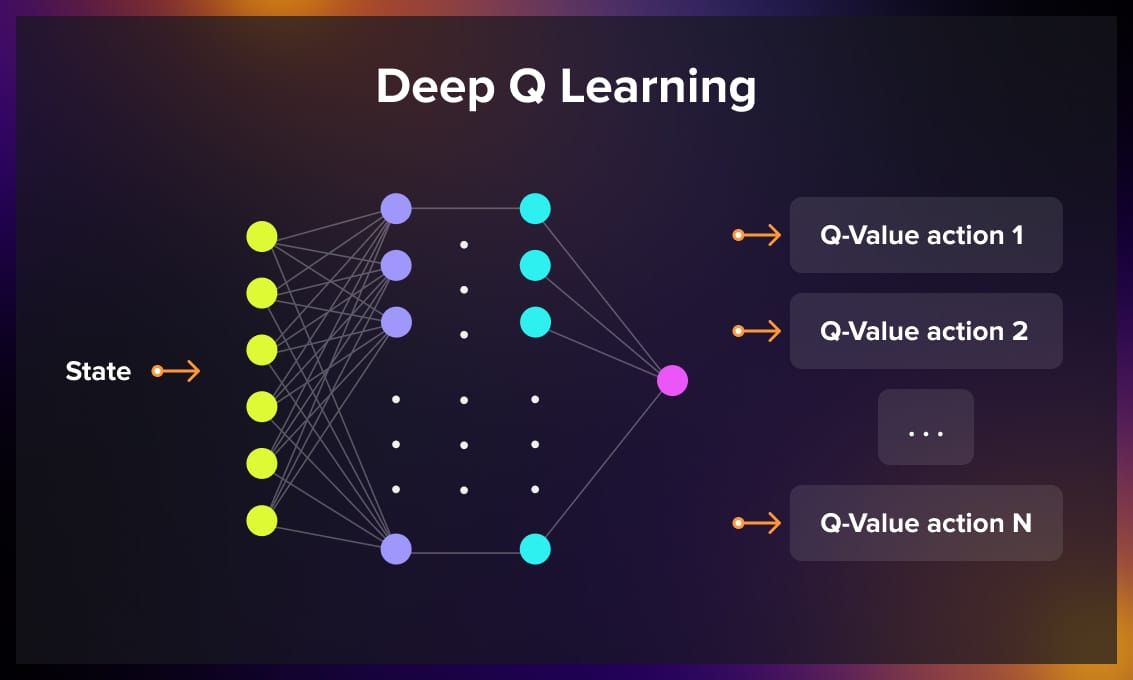
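A minimal tabular Q-learning sketch. It applies the standard update Q(s, a) ← Q(s, a) + α·[r + γ·maxₐ′ Q(s′, a′) − Q(s, a)], where α is the learning rate and γ the discount factor. The six-state corridor environment (reward 1 for reaching the right end, 0 otherwise) and all hyperparameter values are hypothetical choices for illustration.

```python
import random

N_STATES = 6      # hypothetical corridor: states 0..5, goal at state 5
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic toy dynamics: returns (next_state, reward, done)."""
    nxt = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: q[state][action]

    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy behavior policy; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Target bootstraps from the best next action (no future value at the goal).
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


if __name__ == "__main__":
    q = q_learning()
    greedy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
    print(greedy)  # should be "right" in every non-goal state after training
```

Because the target uses the best next action rather than the action the exploring agent actually takes next, Q-learning is off-policy: it learns the values of the greedy policy while still behaving epsilon-greedily.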

Deep Q Network (DQN)

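DQN combines Q-Learning with deep neural networks: instead of storing a table of Q-values, a neural network estimates them. This allows RL to be applied to problems with very large state spaces, such as playing Atari games from raw screen pixels, where a lookup table would be impractical. DQN also uses experience replay and a separate target network to stabilize training.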
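One ingredient of DQN that fits in a few lines is experience replay: past transitions are stored in a buffer, and training uses random minibatches drawn from it, which breaks the correlation between consecutive steps. The sketch below shows such a buffer and the Q-learning targets computed with a separate target network. The neural networks themselves are omitted: `target_q` is a hypothetical stand-in for the target network (any function returning a list of action values for a state), and the class and function names are illustrative.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random minibatch without replacement.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, target_q, gamma=0.99):
    """y = r + gamma * max_a' target_q(s')[a'], or just r when the episode ended.

    target_q: stand-in for the target network (hypothetical); in DQN it would be
    a periodically synced copy of the online network.
    """
    return [
        r if done else r + gamma * max(target_q(s_next))
        for (_s, _a, r, s_next, done) in batch
    ]


if __name__ == "__main__":
    buf = ReplayBuffer(capacity=1000, seed=0)
    for t in range(10):
        buf.push(state=t, action=t % 2, reward=1.0, next_state=t + 1, done=(t == 9))
    batch = buf.sample(4)
    fake_target_q = lambda s: [0.0, 0.5]  # made-up action values, for illustration only
    print(td_targets(batch, fake_target_q))
```

In a full DQN loop, the online network would be trained to move its prediction for each sampled (state, action) pair toward these targets, and its weights would be copied into the target network every so often.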

Policy gradient methods

These methods directly learn the policy by adjusting its parameters to increase the likelihood of actions leading to higher rewards. REINFORCE is a popular algorithm in this category.
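A minimal REINFORCE sketch on the simplest possible problem: a three-armed bandit where each episode is a single action, so the return G is just that action’s reward. The policy is a softmax over one learnable preference per arm, and each step applies the REINFORCE rule θ ← θ + α·(G − b)·∇ log π(a), with a running-average baseline b to reduce variance. The payout probabilities, learning rate, and episode count are assumptions for illustration.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(true_means, episodes=5000, lr=0.1, seed=0):
    """REINFORCE with a running-average baseline on a Bernoulli bandit.

    true_means: hypothetical payout probability of each arm.
    Returns the final action probabilities of the learned policy.
    """
    rng = random.Random(seed)
    theta = [0.0] * len(true_means)  # one preference per arm
    baseline = 0.0
    for t in range(1, episodes + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]  # sample from the policy
        reward = 1.0 if rng.random() < true_means[action] else 0.0  # one-step return G
        advantage = reward - baseline
        # For a softmax policy, d log pi(action) / d theta[b] = 1[b == action] - pi(b).
        for b in range(len(theta)):
            grad = (1.0 if b == action else 0.0) - probs[b]
            theta[b] += lr * advantage * grad
        baseline += (reward - baseline) / t  # update the running mean afterwards
    return softmax(theta)


if __name__ == "__main__":
    print([round(p, 3) for p in reinforce_bandit([0.2, 0.5, 0.8])])
```

After training, the policy should put most of its probability on the third arm. In a multi-step task, G would be the (discounted) return from each step onward, and the gradient would be summed over all steps of the episode.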

Real-world examples

Reinforcement learning is used to solve a wide range of real-world problems.

Game playing

Games, with their intricate dynamics and unpredictable scenarios, serve as a proving ground for RL algorithms. A standout example is AlphaGo, developed by DeepMind, which stunned the world in 2016 by defeating Lee Sedol, one of the world’s top Go players. OpenAI’s Dota 2 bots (OpenAI Five) showcased another feat, mastering the complexities of this multiplayer online battle arena game through large-scale self-play. RL’s ability to grasp optimal strategies through repeated interactions makes it a natural fit for the dynamic and strategic nature of game environments.

Robotics

In robotics, RL helps machines learn and adapt in the physical world. Instead of relying solely on hand-programmed behavior, robots can learn to grasp objects, navigate complex environments, and control their movements from their own interactions. Consider a robot that learns to manipulate objects with dexterity or fine-tunes its movements for efficient locomotion. RL provides the flexibility needed to cope with the uncertainties of the real world, changing how machines are trained to perform tasks in dynamic environments. Boston Dynamics’ robots are often mentioned in this context: the company has explored RL for locomotion control, although much of its robots’ agility has historically come from model-based control methods.

Autonomous systems

Autonomous systems, from self-driving cars to drones, draw on reinforcement learning among other techniques. RL can train these systems to make real-time decisions based on environmental feedback. For self-driving cars, RL supports adaptive decision-making in response to changing traffic conditions, with the aim of safer and more efficient driving. Similarly, drones equipped with RL algorithms can adapt to diverse terrains and unexpected obstacles, showcasing the adaptability required in autonomous aerial systems.

Resource management

In resource management, where optimization is paramount, reinforcement learning proves its mettle. Applications span across smart grids, traffic signal control, and inventory management. RL algorithms can learn to optimize energy consumption in smart grids by adapting to fluctuating demand patterns.

How is reinforcement learning different from other ML techniques?

In supervised learning, the algorithm is trained on a labeled dataset, where each input is paired with its corresponding output. The model generalizes from the labeled examples to make predictions or classifications on new, unseen data. It is commonly applied in tasks such as image recognition, speech recognition, and natural language processing, where the algorithm learns patterns from labeled data.

In unsupervised learning, models are trained on unlabeled data, and the algorithm is tasked with identifying patterns, relationships, or structures within the data. The model discovers hidden structures or groupings in the data without predefined output labels. Some of the use cases include clustering, dimensionality reduction, and anomaly detection.

Reinforcement learning focuses on training agents to make sequential decisions in an environment by receiving feedback in the form of rewards or penalties. The agent aims to learn a policy that maximizes cumulative rewards over time, refining its decision-making through trial and error. It is particularly effective in scenarios where an agent interacts with an environment, such as robotics, game playing, and autonomous systems. RL excels in dynamic, unpredictable environments, where predefined solutions may not exist.

You can find more information in our detailed guide to ML techniques.

Conclusion

Reinforcement learning empowers developers to create intelligent systems capable of learning and adapting to dynamic environments. By integrating RL into your projects, you can enable your systems to make decisions and take actions based on their experiences and the feedback they receive. This makes reinforcement learning particularly valuable in situations where traditional rule-based systems or supervised learning approaches may struggle.
